At Eedi, our goal is to help teachers uncover and address student misconceptions in maths. But what if the models we use to track student learning are missing key signals, especially when students get answers wrong? In our latest paper, ‘Uncertainty-Aware Knowledge Tracing Models’, we explore how predictive uncertainty can reveal when these models are likely to make mistakes.

Knowledge Tracing (KT) models are used to estimate what a student knows based on their past answers. They help personalise learning by predicting how a student might respond to future questions. But they are not perfect, and they are especially prone to mistakes when the model’s predictive confidence is low.

The insight: it’s not just about what the model predicts, but how sure it is. By making uncertainty visible, we open up new ways to catch misconceptions early and support every learner more effectively.

Imagine a student is asked to multiply two fractions. The model predicts they will answer correctly. But the student adds the numerators and adds the denominators instead of multiplying them, revealing a common misconception.
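To make the misconception concrete, here is a small sketch using Python's standard-library `fractions` module. The fractions 2/3 and 1/4 are an illustrative choice, not taken from a real Eedi question; the two functions simply contrast the correct method with the "add the tops and add the bottoms" error.

```python
from fractions import Fraction


def multiply(a: Fraction, b: Fraction) -> Fraction:
    """Correct method: multiply the numerators and multiply the denominators."""
    return a * b


def add_tops_and_bottoms(a: Fraction, b: Fraction) -> Fraction:
    """The misconception: add the numerators and add the denominators."""
    return Fraction(a.numerator + b.numerator, a.denominator + b.denominator)


# Illustrative values only.
a, b = Fraction(2, 3), Fraction(1, 4)
print(multiply(a, b))              # 1/6
print(add_tops_and_bottoms(a, b))  # 3/7
```

A wrong answer such as 3/7 could be offered as a distractor in a multiple-choice question, so a student choosing it would point to this specific misconception rather than to a random slip.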

At first glance, this looks like a simple prediction error. Yet the model’s own behaviour tells a deeper story. Its confidence in that prediction was low, a warning that the prediction might be wrong. Traditional KT models ignore this uncertainty and focus only on whether the prediction was right or wrong.

But uncertainty carries information. When we overlook this signal, we lose an opportunity to detect misconceptions. In short, models don’t just make errors: every prediction they make comes with an associated predictive uncertainty. The challenge is learning how to use it.

To go beyond point predictions, we redesign Knowledge Tracing models to estimate their own uncertainty using Monte Carlo Dropout (MC-Dropout).
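As a rough illustration of MC-Dropout at inference time, the sketch below uses a toy linear classifier over four answer options (A to D), written in plain Python. The features, weights, dropout rate of 0.2 and 50 passes are all hypothetical, and dropout is applied to the input features only for simplicity. A real KT model would be a trained neural network; in a framework such as PyTorch, the equivalent step is usually to keep the dropout layers in training mode while predicting.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def forward(features, weights, dropout_p, rng):
    """One forward pass of a toy linear classifier with dropout left ON.

    Each input feature is zeroed with probability dropout_p and the survivors
    are rescaled by 1 / (1 - dropout_p) (inverted dropout), so every call
    effectively samples a different thinned network.
    """
    kept = [
        0.0 if rng.random() < dropout_p else x / (1.0 - dropout_p)
        for x in features
    ]
    logits = [sum(w * x for w, x in zip(row, kept)) for row in weights]
    return softmax(logits)


def mc_dropout_predict(features, weights, dropout_p=0.2, n_samples=50, seed=0):
    """Run n_samples stochastic passes and return every sampled distribution."""
    rng = random.Random(seed)
    return [forward(features, weights, dropout_p, rng) for _ in range(n_samples)]


# Hypothetical "student state" features and weights for a 4-option question.
features = [0.9, -0.3, 0.5, 1.2]
weights = [
    [1.0, 0.2, 0.4, 0.8],   # option A
    [0.1, 0.9, -0.2, 0.3],  # option B
    [-0.4, 0.1, 0.7, 0.2],  # option C
    [0.3, -0.5, 0.1, 0.6],  # option D
]
samples = mc_dropout_predict(features, weights)
# Averaging the sampled distributions gives the MC-Dropout predictive distribution.
mean_probs = [sum(s[c] for s in samples) / len(samples) for c in range(4)]
```

The spread between the individual entries of `samples` is what carries the uncertainty signal; a deterministic model with dropout switched off would return the same distribution on every pass.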

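At inference time, we run the model multiple times with dropout still enabled, so each pass samples a slightly different network and together the passes form a distribution over possible outcomes. From this distribution we calculate the predictive entropy, a measure of how confident or uncertain the model is in its prediction:

$$
H[y \mid x] = -\sum_{c=1}^{C} \bar{p}_c \log \bar{p}_c, \qquad \bar{p}_c = \frac{1}{T} \sum_{t=1}^{T} p_t(y = c \mid x)
$$

where $T$ is the number of stochastic forward passes, $C$ is the number of possible outcomes (for example, the answer options of a question), and $p_t(y = c \mid x)$ is the probability that the $t$-th pass assigns to outcome $c$.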

Low entropy means the model is confident and high entropy signals uncertainty. This gives us a way to quantify when the model is unsure.
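Predictive entropy can be computed directly from the sampled distributions: average them, then take the entropy of the average. This minimal sketch uses the natural log, so entropy is measured in nats. The two sets of samples are hand-made examples of a confident and an uncertain model, not real model outputs.

```python
import math


def predictive_entropy(samples):
    """Entropy (in nats) of the mean predictive distribution over T MC samples.

    samples: list of T probability vectors, each over the same C outcomes.
    """
    n = len(samples)
    n_classes = len(samples[0])
    mean = [sum(s[c] for s in samples) / n for c in range(n_classes)]
    # 0 * log 0 is taken as 0, so zero-probability outcomes are skipped.
    return -sum(p * math.log(p) for p in mean if p > 0)


# Confident: every pass puts almost all of the mass on option A.
confident = [[0.97, 0.01, 0.01, 0.01]] * 10
# Uncertain: the passes disagree about which option the student will choose.
uncertain = [
    [0.7, 0.1, 0.1, 0.1],
    [0.1, 0.7, 0.1, 0.1],
    [0.1, 0.1, 0.7, 0.1],
    [0.1, 0.1, 0.1, 0.7],
]

print(round(predictive_entropy(confident), 3))  # 0.168
print(round(predictive_entropy(uncertain), 3))  # 1.386
```

With four options the maximum possible entropy is ln 4 ≈ 1.386, reached when the averaged distribution is uniform, which is exactly what happens in the uncertain example even though each individual pass looks fairly confident.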

We apply this MC-Dropout technique across three commonly used KT architectures and one newly proposed one. We also extend them to predict not just correctness but the specific distractor a student is likely to choose. The result is a more detailed, uncertainty-aware view of student understanding that can help surface misconceptions early and guide better interventions.
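To illustrate why predicting the specific distractor is more informative than predicting correctness alone, the sketch below collapses a distribution over four answer options into a correct/incorrect distribution. The option probabilities and the choice of option A as the correct answer are hypothetical. Both predictions give the same probability of a correct answer, so their correctness-level entropy is identical, but only the option-level view shows that one student's errors concentrate on a single distractor.

```python
import math


def entropy(probs):
    """Shannon entropy in nats, skipping zero-probability outcomes."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def to_correctness(option_probs, key_index):
    """Collapse a distribution over answer options to [P(correct), P(incorrect)]."""
    p_correct = option_probs[key_index]
    return [p_correct, 1.0 - p_correct]


KEY = 0  # hypothetical: option A is the correct answer

# Both predictions give the same probability of a correct answer (0.4)...
focused = [0.4, 0.55, 0.03, 0.02]  # ...but errors concentrate on distractor B
diffuse = [0.4, 0.2, 0.2, 0.2]     # ...while here errors spread across B, C and D

for name, probs in [("focused", focused), ("diffuse", diffuse)]:
    binary = to_correctness(probs, KEY)
    # The most likely wrong option is the candidate misconception.
    likely_distractor = max(
        (i for i in range(len(probs)) if i != KEY), key=lambda i: probs[i]
    )
    print(name, round(entropy(binary), 3), round(entropy(probs), 3),
          "most likely distractor:", "ABCD"[likely_distractor])
```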

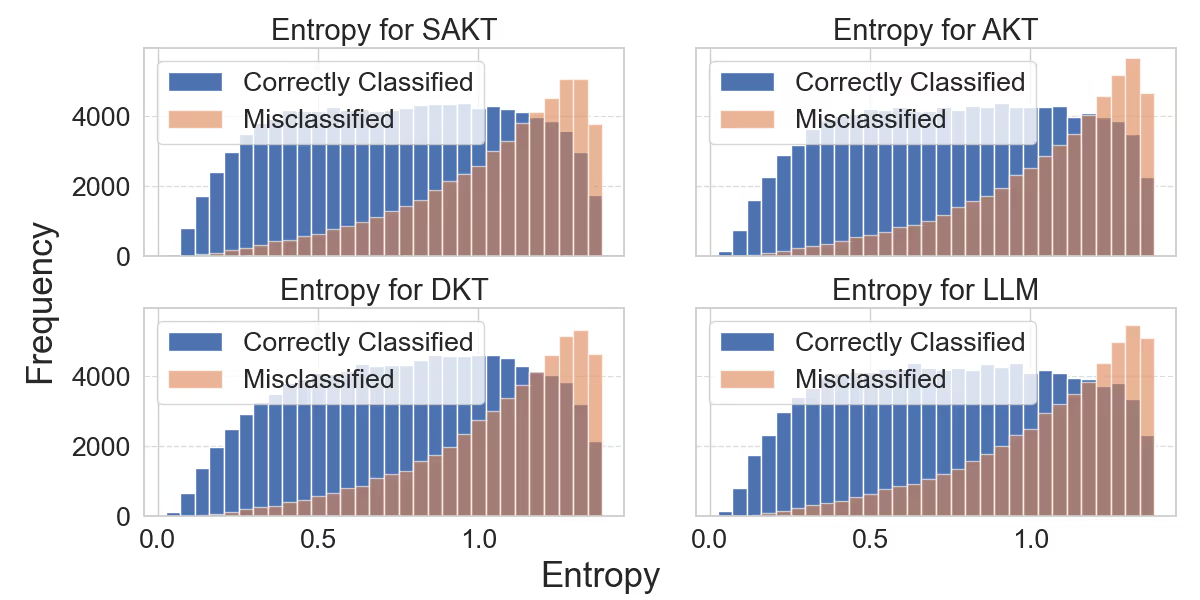

We analysed the total entropy of the model predictions to see whether it is a valuable uncertainty metric for Knowledge Tracing models. We wanted to know to what extent a model can signal that it is not confident in its predictions, and whether its uncertainty is larger when it is also wrong about the response a student chooses. The results show that the model has higher entropy when it misclassifies a student’s response. This is great news: the model can tell us when it is not confident and therefore more likely to make an incorrect prediction for a student.
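The comparison described above can be sketched as follows: for each prediction, check whether the most likely option matches the option the student actually chose, then compare the average entropy of correct and misclassified predictions. The four predictions and responses below are made-up toy data for illustration only and do not reproduce the paper's results.

```python
import math
from statistics import mean


def entropy(probs):
    """Shannon entropy in nats, skipping zero-probability outcomes."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def entropy_by_outcome(predictions, observed):
    """Split total entropy by whether the top predicted option matched the response.

    predictions: mean predictive distributions, one per student-question pair.
    observed: index of the option each student actually chose.
    Returns (mean entropy when correct, mean entropy when misclassified);
    a group with no members yields NaN.
    """
    hits, misses = [], []
    for probs, chosen in zip(predictions, observed):
        predicted = max(range(len(probs)), key=lambda c: probs[c])
        (hits if predicted == chosen else misses).append(entropy(probs))
    return (
        mean(hits) if hits else float("nan"),
        mean(misses) if misses else float("nan"),
    )


# Made-up toy data, for illustration only (not results from the paper).
predictions = [
    [0.90, 0.05, 0.03, 0.02],
    [0.85, 0.10, 0.03, 0.02],
    [0.40, 0.35, 0.15, 0.10],
    [0.30, 0.30, 0.25, 0.15],
]
observed = [0, 0, 1, 2]
correct_h, wrong_h = entropy_by_outcome(predictions, observed)
```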

At Eedi, we are building the intelligence layer for Edtech platforms to help diagnose misconceptions. This means we not only want to predict whether a student will answer a question correctly or whether they have a specific misconception, but also how confident our models are in those predictions. By developing models that provide a measure of uncertainty in their predictions, we can better avoid acting on incorrect predictions. This is pedagogically important, as we don’t want an incorrect prediction to result in a misconception going undetected.
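One simple way a platform could use this signal is to act on confident predictions and defer uncertain ones, for example by asking a further diagnostic question instead of trusting the model. The sketch below is a hypothetical policy, not the method from the paper: it normalises entropy by its maximum, log C, and uses an arbitrary threshold of 0.6 that would need tuning on held-out data.

```python
import math


def normalised_entropy(probs):
    """Entropy divided by its maximum, log(C), so the score lies in [0, 1]."""
    h = -sum(p * math.log(p) for p in probs if p > 0)
    return h / math.log(len(probs))


def route_prediction(probs, threshold=0.6):
    """Act on confident predictions; defer uncertain ones.

    threshold is a hypothetical cut-off, not a value from the paper.
    Returns ("act", option_index) or ("defer", None).
    """
    if normalised_entropy(probs) > threshold:
        return ("defer", None)  # e.g. ask a diagnostic question instead
    best = max(range(len(probs)), key=lambda c: probs[c])
    return ("act", best)


print(route_prediction([0.05, 0.88, 0.04, 0.03]))  # ('act', 1)
print(route_prediction([0.30, 0.30, 0.25, 0.15]))  # ('defer', None)
```

Normalising by log C keeps the threshold comparable across questions with different numbers of answer options.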

This research highlights an important direction for Edtech platforms that use Knowledge Tracing models to power misconception diagnosis or personalised learning pathway predictions. By utilising uncertainty in Knowledge Tracing models, we can avoid acting on unreliable predictions and reduce the risk of a student’s misconception going undetected.

We hope this work opens up a new and exciting research direction for Knowledge Tracing: finding even better ways to determine when a model is uncertain in its predictions.

The full paper is available at the following link: Uncertainty-Aware Knowledge Tracing Models